European AI Regulation: Opportunities, Risks, and Future Scenarios from a Metropolitan Perspective

This document brings together the conclusions of the seminar “European AI Regulation. Opportunities, Risks, and Future Scenarios from a Metropolitan Perspective”, held on 19 September 2024 at CIDOB and attended by academics and experts from the public and private sectors who work on the regulation and management of AI technologies. The seminar is part of CIDOB’s research and foresight programme in geopolitics and international relations, which is supported by the Barcelona Metropolitan Area. The programme aims to offer knowledge to the general and specialist public, to produce publications and stimulate debate, and to incorporate new foresight methodologies into the analysis of today’s most urgent international challenges.

Social and technological innovation is crucial for responding to people’s needs and for moving towards more inclusive and equitable local governments. Breakthrough technologies like artificial intelligence (AI) open up new opportunities to improve public services and to manage some of the most urgent problems, among them sustainable mobility, the energy transition, responsible water use, and pollution reduction. In the coming years, the increasing use of AI will transform the various branches of public administration. These will need to be prepared for the new challenges and risks that come with the extensive use of algorithmic systems. Local governments also have a double responsibility. They are “consumers” of technological solutions, and they are “regulators” that must guarantee a safe, ethical framework. That framework must respect the laws currently in force, be compatible with social and cultural norms, and put people at the centre.

In this regard, the PAM 2024-2027 (Metropolitan Action Plan of the Metropolitan Area of Barcelona) has identified AI as one of the main focuses of future metropolitan policies. Metropolitan cities must be prepared to adopt algorithm-based solutions and to manage the risks these might entail. They must also promote policy measures that allow them to compete and to attract and retain talent, in order to consolidate the Barcelona Metropolitan Area as a digital metropolis. All this should be done with the regulatory context in mind. In future, that context will be shaped by the recently approved EU Regulation on Artificial Intelligence (known as the AI Act) and by geopolitical trends on the global scale.

This CIDOB Briefing aims to contribute to reflection on how local governments should prepare to tackle the challenges of AI adoption successfully. The first section analyses the implications of European AI regulation for local governments and metropolitan areas. The second section presents the results of a forecasting exercise carried out in the seminar. This exercise identified some desirable futures for the governance of artificial intelligence, the main factors of uncertainty that might condition these futures, and four future scenarios. The Briefing concludes with some reflections on how to ensure that the strategies and policy frameworks now being established by the Metropolitan Area of Barcelona remain resilient and adaptable, whatever direction digital transformation takes at the local level.

The context: European AI regulation and its impact on local governments

After years of negotiation, on 13 June 2024 the European Union adopted the European Regulation on Artificial Intelligence (AI Act), which establishes harmonised rules on AI at the European level. Its most notable characteristic is that it seeks to create a comprehensive legal framework for AI throughout Europe, with the aim of ensuring that AI systems are developed and used responsibly. To do so, it adopts a risk-based approach. It prohibits uses that pose an unacceptable risk because they are likely to violate fundamental rights. Examples include some biometric categorisation systems, systems of cognitive behavioural manipulation, and social scoring systems. It also regulates uses that entail a high risk (such as AI used in critical transport infrastructure) or a limited risk (such as chatbots). For general-purpose AI models, such as those underlying ChatGPT, the AI Act takes into account the systemic risks that might arise from their large-scale use.

This European regulation creates new obligations for a wide range of actors, from companies and technology developers to governments and regulatory bodies, including local administrations. In particular, town and city councils will now need to make sure that the AI systems they use or intend to use are properly classified. They must also identify the risks that might result from using them. Moreover, when procuring this technology, they must ensure that the systems they acquire comply with the AI Act and with other European regulations, among them the General Data Protection Regulation (Almonacid, 2024).1 Many algorithmic systems used by local governments could also be classified as high or limited risk (Galceran-Vercher and Vidal, 2024). Examples include systems used to provide essential public goods and services, systems that evaluate the eligibility of applicants for certain social services, and systems that improve traffic management and urban mobility.

Furthermore, the AI Act (Article 27) stipulates that, before deployment, high-risk AI systems must undergo an assessment of the impact their use might have on fundamental rights. Local administrations will therefore have to introduce checks on some applications, ideally on a recurring basis. The aim is to evaluate both the algorithms and the data sets in order to detect possible biases based on gender, language, origin, ethnicity, religious belief, age, educational level, physical and mental disability, health, and economic situation. Finally, European regulations impose obligations regarding the transparency and explainability of algorithmic systems, especially when these affect citizens’ rights. Public registers of algorithms are very useful tools in this regard, and the local governments of Amsterdam, Helsinki, Nantes, and Lyon already use them.

To sum up, the AI Act represents a significant challenge for local governments, which will have to adapt their processes, policies, and strategies to comply with these new requirements. A common concern is whether they will be able to implement this new regulatory framework. The doubt stems not from a lack of willingness but from insufficient technical capacity. Indeed, a widespread problem for local administrations is a shortage of technical skills and specialist knowledge. This applies both to the complex mesh of European regulations and to how AI-based systems actually work.2 There is therefore an urgent need to encourage both general digital literacy and specific AI literacy among public employees and citizens alike. Only then can the full potential of AI be harnessed responsibly.

How can we prepare public administrations for tomorrow? Identifying uncertainties and plausible scenarios

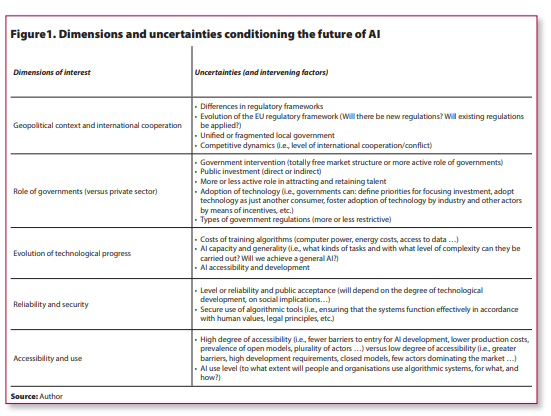

Artificial intelligence is a general-purpose technology with a very large number of applications. Together, these two features give rise to many uncertainties and make any forecasting exercise difficult. The uncertainties come not only from the development of the technology itself but also from the evolution of the political and socioeconomic context in which it is used. Identifying them and estimating how their evolution might affect local governments makes it possible to anticipate risks and opportunities, which in turn improves strategic planning and policymaking. Presented below are five dimensions of interest and uncertainty that might have medium- to long-term effects on the development and management of algorithmic systems at the local level.

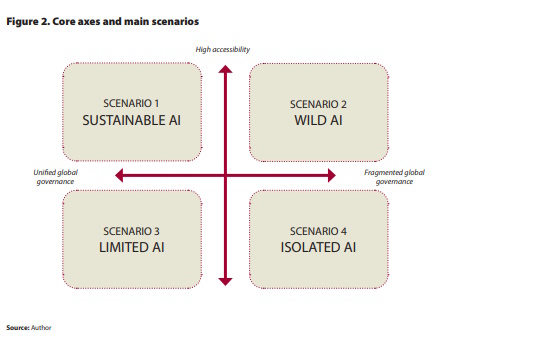

With these elements in mind, several forecasting exercises from the past four years were reviewed (specifically, PWC, 2020; United Kingdom Government Office for Science, 2023; Economist Impact, 2024; and OECD, 2024). On that basis, two core axes (accessibility and geopolitical context) have been selected to organise the construction of plausible scenarios for the governance of artificial intelligence in the coming decade.

Four specific hypothetical futures for the governance of AI in 2035 have been outlined: (1) Sustainable AI; (2) Wild AI; (3) Limited AI; and (4) Isolated AI. Before describing them, I believe that it is important to stress that these scenarios are not predictions of the future but, rather, have been conceived as instruments for debate and reflection, taking into account both desirable and undesirable elements of governance.

Scenario 1 – Sustainable AI

There is general consensus on a set of ethical principles and technical standards for the development of artificial intelligence. The EU has played an influential role in negotiating this unified governance framework, which has ended up including values such as privacy, transparency, and the protection of fundamental rights. Most of the world’s governments have played very active roles. National AI strategies are multiplying, levels of public investment have risen significantly, and initiatives promoting the responsible use of algorithmic systems are proliferating everywhere. Because regulatory frameworks exist and are to some extent restrictive, fewer AI applications are developed, but those that do reach the market are more secure. This has also improved public perception of the safety of algorithmic systems, and the resulting confidence has increased both demand for these technologies and their adoption. Finally, this scenario unfolds in a context of high accessibility to the development and use of algorithmic systems, which explains why developers (and the industry) prefer open source models.

Scenario 2 – Wild AI

All attempts over the last decade to reach global consensus on basic ethical principles and technical harmonisation standards for the development of AI systems have failed. This is therefore a world in which multiple heterogeneous policy frameworks coexist, with different levels of maturity and varying degrees of responsibility and obligation. Several AI regulatory blocs have also been established among countries with shared values. A few countries have adopted national AI regulations and strategies, and there is some public investment. Still, the role of national governments in developing algorithmic systems is very modest, because industry and the large technology corporations dominate both the development and the regulation of this breakthrough technology. Furthermore, in this scenario, the regulations promoted at the various levels of government are much less strict. In some cases, data privacy laws are interpreted more loosely to facilitate innovation and experimentation. Regulatory fragmentation enables companies and smaller startups to explore market niches, and open source models are relatively prevalent. Three conditions combine here: high accessibility to the technology, the absence of a unified policy framework, and a lax regulatory approach. Together, they lead to an uncontrolled proliferation of AI systems that engage in morally questionable practices, such as behavioural manipulation. Moreover, malicious actors can very easily gain access to enormously powerful AI systems. This increases the risk of accidents and cyberattacks and could escalate the deepfakes crisis. The resulting feeling of insecurity and lack of control undermines trust in algorithmic systems and perceptions of their safety. In turn, organisations and the population in general make only moderate use of them.

Scenario 3 – Limited AI

There is some general consensus on overall technical standards for the development of AI technology, but not on ethical principles. One can speak, then, of a moderately unified framework of global governance. In this situation, the EU has very limited global influence, because China and the United States dominate the world market. Some national regulations exist, but most are very lax. Levels of public investment in AI systems are very low, and industry essentially leads their development. Closed source models prevail, and small and medium-sized companies play only a scant part in the market. Three factors largely explain this: the high costs of developing algorithmic systems, the difficulty of complying with fragmented regulatory frameworks (which only large companies can manage), and the lack of public investment. In this scenario, public confidence in algorithmic systems is only moderate, so organisations and individuals adopt them at relatively low rates.

Scenario 4 – Isolated AI

It has not been possible to reach consensus on a framework of unified global AI governance. Instead, there is a fragmented mosaic of regulatory initiatives and models. The same is true in Europe, where the Regulation on Artificial Intelligence (AI Act) has not been implemented satisfactorily. The result is considerable regulatory fragmentation and numerous problems arising from the limited scalability of AI systems. In this scenario, local governments play a rather limited role, neither regulating nor investing in the development of AI systems. Instead, industry promotes voluntary self-regulation initiatives and decides which applications are to be developed. It does so, however, according to commercial criteria that do not necessarily consider social benefits (or harms). Governance is complex and fragmented, which makes the development and use of algorithmic systems poorly accessible. Except for large companies with greater resources, this hampers the scaling of many AI products across different contexts. Adoption is therefore very low, as one would expect in a fragmented data environment that produces low-quality, low-performance AI. Furthermore, many defective and insecure products are sold on the market. This further erodes confidence in algorithmic systems and reduces their use across a wide variety of organisations.

Risks, opportunities, and desirable future scenarios

As noted above, the construction of scenarios is understood not as an instrument for predicting the future, but as one to stimulate debate and reflection. To be specific, in the exercise carried out in the framework of this seminar, the aim was to find answers to three basic questions: (1) what futures might be more desirable than others?; (2) from the perspective of local government, what risks and opportunities might be expected to arise in each of these scenarios?; and (3) how might the risks associated with each of these scenarios be managed? To sum up, it is a matter of thinking about how, in a situation marked by many uncertainties, local governments might guarantee democratic supervision of the algorithmic systems that are used.

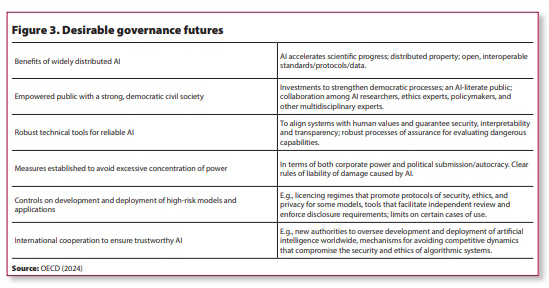

To begin with the first question, about desirable futures, it could be argued that the Sustainable AI scenario brings together the most elements favourable to the responsible deployment of algorithmic systems at the local and metropolitan scales. These elements are summarised below. The Barcelona Metropolitan Area could take them into account in the processes of strategic reflection and policy advocacy it might promote.

Beyond identifying desirable futures, the analysis of the different contexts outlined in the four scenarios reveals a series of implications for local governments. For example, suppose there is broad consensus on the ethical principles and/or technical standards that must guide the development of artificial intelligence. It is plausible that, in such a context, robust regulatory frameworks would be easier to develop and algorithmic systems would gain wider acceptance. This would allow local governments to develop and deploy AI-based solutions for more secure urban management without encountering resistance from citizens.

In this scenario, public administrations, including local ones, will have to establish viable frameworks for certification, audits, and checks to ensure compliance with the different regulations. Resources should therefore be allocated to strengthening AI capacities at the national, regional, and local levels. They should also go towards improving the technical skills of people in positions of responsibility in government agencies directly or indirectly involved in deploying algorithmic systems. This scenario should also open the door to more funding and technical training programmes for local governments, so that they can manage the responsibilities they will need to take on. It should likewise create more opportunities for collaboration with the private sector and universities.

By contrast, in a context of fragmented global governance and uncontrolled expansion of algorithmic systems, governments may have to design strategies for integrating AI development efforts into a more unstable and uncertain environment. This will require flexible, adaptable approaches that aim to reduce unnecessary regulatory complexity. Cyberattacks can also be expected to increase, and municipalities, especially small and medium-sized ones, are not always prepared to deal with them. This scenario also poses the greatest challenges for data protection and citizens’ digital rights. It would therefore accentuate the need to promote local AI governance frameworks aligned with democratic values.

Finally, a context of high accessibility could facilitate the emergence of smaller, local developers able to adapt algorithmic systems to the specific needs of each municipality. By contrast, in scenarios dominated by large multinationals, local governments would find it harder to acquire products tailored to their particular situations.

How to guarantee democratic oversight of algorithmic systems and satisfactory distribution of AI benefits?

There is general consensus on the urgent need to reform the present model of public administration to make it more efficient, more transparent, and more focused on citizens’ needs. The increasing accessibility of groundbreaking technologies like AI opens up new opportunities to advance in this direction. This holds, however, only if digitalisation processes are accompanied by measures that also change how the public administration works. In other words, it is necessary not only to digitalise but also to transform. Given the rising importance and growing popularity of artificial intelligence technologies, many local governments must manage two attitudes that coexist within a single organisation. One is an excessive optimism about the potential of algorithmic systems to fix every problem. The other is an apocalyptic discourse about the risks of these technologies, which could slow down their adoption.

In these circumstances, it is more relevant than ever to ask how to guarantee democratic oversight of algorithmic systems and a satisfactory distribution of the benefits of AI use by local administrations. A first suggestion would be to move the debate beyond whether or not to regulate. The focus should instead be, first, on having good regulations and, second, on effectively implementing those already in place. For example, there is no need to wait for the AI Act to ban social scoring systems, because other European regulations, like the General Data Protection Regulation, already prohibit such practices. At the same time, several experts have repeatedly warned that some requirements of European regulations can only be met by large corporations, not by small and medium-sized companies. This warning can be extended to local governments, since highly demanding regulations can lead to high rates of non-compliance. Indeed, in some cases it might even be desirable for the public administration to accept the reasonable risks entailed by any process of digital transformation.

Effective implementation of European, national, and regional regulations also depends on regulatory bodies working to their full potential. Examples include the European Centre for Algorithmic Transparency (ECAT) and the Spanish Agency for the Supervision of Artificial Intelligence (AESIA). Ideally, these bodies would also offer technical assistance and practical guidance designed specifically for local governments. In this regard, local administrations need more practical tools to guarantee the responsible use of algorithmic systems. For example, many bodies have AI governance strategies, but few local administrations have introduced public registers of algorithms or carried out ethical evaluations of their data and artificial intelligence systems.3

Finally, no effort should be spared in education and awareness-raising. These actions should aim to improve understanding of AI and its impact among both municipal workers and citizens. They should also encourage initiatives that ensure both groups take part in the safe and ethical deployment of algorithmic systems. The public administration need not carry out such actions itself, since it often lacks both the tools and the appropriate knowledge. It might instead be much more effective to encourage alliances with organisations like Xnet and CIVICAi, which have a proven track record in defending digital rights. Protecting those rights is more important than ever, whatever AI future we end up moving towards.

Bibliography

Almonacid, V. (2024) “Reglamento (europeo) de Inteligencia Artificial: impacto y obligaciones que genera en los Ayuntamientos”. El Consultor de los Ayuntamientos, 15 July 2024, LA LEY; Diario LA LEY, No. 10553, Sección Tribuna, 24 July 2024, LA LEY.

Ben Dhaou, S., Isagah, T., Distor, C., and Ruas, I.C. (2024) Global Assessment of Responsible Artificial Intelligence in Cities: Research and recommendations to leverage AI for people-centred smart cities. Nairobi, Kenya: United Nations Human Settlements Programme (UN-Habitat).

Economist Impact (2024) AI landscapes: Exploring future scenarios of AI through to 2030. London: Economist Impact. Online at: https://impact.economist.com/projects/what-next-for-ai/ai-landscapes/

Galceran-Vercher, M., and Vidal, A. (2024) “Mapping urban artificial intelligence: first report of GOUAI’s Atlas of Urban AI”. CIDOB Briefings, No. 56. Online at: https://www.cidob.org/en/publications/mapping-urban-artificial-intelligence-first-report-gouais-atlas-urban-ai

OECD (2024) “Assessing potential future artificial intelligence risks, benefits and policy imperatives”. OECD Artificial Intelligence Papers, November 2024, No. 27.

PWC (2020) The many futures of Artificial Intelligence: Scenarios of what AI could look like in the EU by 2025. March 2020.

United Kingdom Government Office for Science (2023) “Future Risks of Frontier AI: Which capabilities and risks could emerge at the cutting edge of AI in the future?”. Department for Science, Innovation and Technology. Government of the United Kingdom. October 2023. Online at: https://assets.publishing.service.gov.uk/media/653bc393d10f3500139a6ac5/future-risks-of-frontier-ai-annex-a.pdf (last accessed 30 November 2024)

Notes:

1- The AI Act can be seen as the sister regulation of the General Data Protection Regulation (GDPR), applicable since May 2018, as there can be no AI without data.

2- A recent study of 122 cities around the world indicates that up to 20% of chief technology managers and directors, and more than 55% of civil servants, are working on AI projects without the required knowledge and prior experience (Ben Dhaou et al., 2024).

3- The PIO (Principles, Indicators, and Observables) self-assessment model designed by the Observatory for Ethics in Artificial Intelligence of Catalonia (OEIAC) could easily be implemented by local administrations, for example.

All the publications express the opinions of their individual authors and do not necessarily reflect the views of CIDOB as an institution