Artificial intelligence and cities: The global race to regulate algorithms

There is a growing need to ensure that fundamental rights are not compromised by the development and use of artificial intelligence (AI) applications. A global race to set the standards that should govern and regulate AI is underway between global powers, forums and organisations, as well as businesses and public and private institutions.

The fragmentation of the global regulatory environment has led cities to position themselves as influential normative actors.

Cities take on regulatory roles both individually, by developing local governance standards and initiatives, and collectively, within the frameworks of city alliances like CC4DR and Eurocities.

Artificial intelligence (AI) is no longer merely the stuff of science fiction – it became a reality long before the debates over ChatGPT filled the public discourse in late 2022. Like electricity and the internet, AI is set to be among the most powerful transformational forces of our times, with the potential to revolutionise all industries and economic sectors. Its applications are wide-ranging: from algorithmic decision-making and mass processing of large data sets to natural language and voice processing systems, risk predictions, and even controversial biometric recognition. Most of these applications are already being used in sectors as diverse as justice, human resources management, financial services, mobility, healthcare and public services provision.

It is no accident that AI investments have surged around the world, or that governments are increasingly including the concept in their national security strategies. It should also come as no surprise that a competition to dominate AI development has emerged, producing a kind of global race for AI, in which both great powers and major technology platforms explicitly participate. Back in 2017, Vladimir Putin warned that whoever leads in artificial intelligence will rule the world; since then the race has only accelerated.

AI systems are much more than mere components of software. In fact, the socio-technical system around them is at least as important, if not more so. Any debate over AI and its governance should thus consider the organisations that create, develop, implement, use and control AI, as well as the individuals likely to be affected by it and any new social relations it may generate. This is because AI systems have highly significant ethical and legal implications due to their potential to impact a wide range of fundamental rights, such as privacy, non-discrimination and data protection. What is more, AI can – and already does – have adverse effects on democracy and the rule of law. In particular, it has the potential to influence social and political discourse, manipulate public opinion via the production and spread of social network content, filter access to information, and generate new inequalities.

In this context, governments are increasingly urged not only to drive policies that stimulate AI innovation, but also to take measures to protect our societies from the risks that may arise from using this disruptive technology. Hence, the race to develop AI has been joined by the “race to AI regulation”, in which the first to act can gain substantial competitive advantage. National governments are not alone on this new playing field. Their slowness to act means cities are once again positioning themselves as indispensable actors – this time in the development of ethical and responsible AI.

A fragmented global context

Today, global AI governance takes place via a multitude of regulatory frameworks that are fragmented, heterogeneous, dispersed and backed by a variety of actors. All the major powers have published their strategies for promoting the use and development of AI and since 2016 they have begun to coordinate their endeavours (largely unsuccessfully) within intergovernmental frameworks like the G7 and the G20. Some international organisations, including UNESCO and the Organisation for Economic Co-operation and Development (OECD), have joined the race to draw up global regulatory standards for AI, as have many businesses, research institutes, civil society organisations and subnational governments.

The fragmentation of the global landscape may be attributed to a range of causes, including the ambiguity of “artificial intelligence” as a concept.1 Other causes are the fundamental differences between the technology governance models promoted by the world’s three major regional blocs (the United States, China and the European Union [EU]) and the absence of a genuinely global regulatory framework promoted by international organisations. Added to this is the difficulty of juggling the differing interests, norms and codes of conduct of private companies (the main developers of the technology), governments and public opinion.

As with other spheres of global technology governance, significant ideological differences exist between the United States, China and the EU when it comes to data regulation and AI governance. The geopolitical ramifications of this clash of models are greatly conditioning the actions of the other players in the international system. It therefore makes sense to try to understand the key differences between these three approaches, which can broadly be described as capitalist self-regulation (United States), techno-authoritarianism (China) and comprehensive rights-based regulation (EU).

The EU’s regulations

In many respects, the EU is pioneering the consumer protection- and rights-based approach to regulating the digital environment. Its General Data Protection Regulation (GDPR), which came into effect in 2018, set a precedent for data regulation worldwide,2 inspiring similar legislation in the United States and China. In the same vein, the Artificial Intelligence Act (AI Act) presented by the European Commission in 2021, and which remains in negotiation, is one of the most advanced regulatory frameworks in the field, and may shape a new global standard.

This legislative proposal must therefore be viewed in the context of the other initiatives that make up European policy on artificial intelligence, which began in 2018, and which include the strategy Artificial Intelligence for Europe (2018), the Ethics guidelines for trustworthy AI (2019) drawn up by the High Level Expert Group on Artificial Intelligence, and the White Paper on Artificial Intelligence (2020). Together with the Digital Markets Act (DMA) and the Digital Services Act (DSA), the AI Act, which could come into effect in 2024 and which represents a new and innovative regulation based on the potential risks of AI, forms a holistic approach to how authorities seek to govern the use of AI and information technology in society.

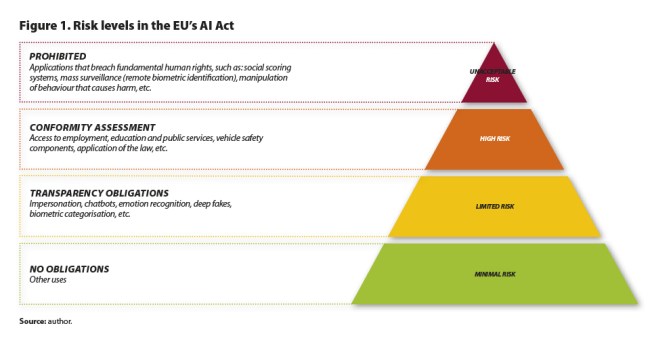

The AI Act pursues two fundamental objectives: a) regulating the use of AI so as to address both the benefits and risks of the technology; and b) creating a safe space for AI innovation that meets high levels of protection of the public interest, security and fundamental rights and liberties. It also seeks to build a trustworthy ecosystem that encourages the adoption of AI services. To do this, it takes a risk-based regulatory approach; in other words, the law imposes certain obligations and restrictions based on four levels of potential risk arising from the use of AI (see Figure 1).

Chinese regulation

In 2017, China adopted its “New Generation Artificial Intelligence Development Plan”, detailing the strategic objectives and principles that should guide AI development in a wide range of sectors. The aim was undoubtedly to make the country a global leader in the field. Since then, China has developed other mechanisms for regulating AI development, especially in algorithmic surveillance and data. Particularly noteworthy is the Personal Information Protection Law (PIPL) approved in 2021, which was to a large extent inspired by Europe’s GDPR. In March 2022 a new regulation came into force that aimed to monitor “recommendation algorithms” for internet search engines. The regulation grants users new rights, including the ability to choose not to use recommendation algorithms and to delete user data. But it also includes somewhat more opaque provisions on content moderation that require private companies to actively promote “positive” information that follows the official Communist Party line. China’s regulation of algorithms thus extends far beyond the digital realm, as it also dictates what kind of behaviour the Chinese government approves of in society. This approach, which many characterise as techno-authoritarianism, contrasts with that of the US.

US regulation

The US approach follows the logic of “surveillance capitalism”. Starting with data protection regulations, it is notable that the US currently has no federal law in this field that could be compared to Europe’s GDPR. Given the federal government’s inaction, five states (California, Colorado, Connecticut, Utah and Virginia) have adopted legislation of their own.3 In the field of artificial intelligence there is also no national-level legislation, although the Algorithmic Accountability Act (2022) is a first step in that direction.

In fact, the delayed development of national-level regulation may be down to the significant opposition among large swathes of society to AI being used in the public sector, particularly the use of facial recognition technology by public security forces. In this sense, while the US and the EU differ on AI regulation (self-regulation vs comprehensive regulation), they share a desire to link regulation to the protection of fundamental digital rights. In the absence of national legislation that explicitly recognises such rights, some subnational bodies have begun to implement their own regulations, as explained below.

Cities’ collective normative power4

AI is expected to bring benefits at all levels, but it is in cities that most of the experimentation is taking place, with AI being adopted, in most cases, alongside other technologies like the Internet of Things, 5G and Big Data. Urban settings are also where its effects are most noticeable. Many local governments already use AI to forecast demand for certain services, to anticipate problems, to communicate more rapidly with citizens via chatbots, to improve decision-making and to make progress on sustainability goals, above all in areas like air quality and mobility.

Nevertheless, the growing adoption of AI in urban settings does not come without challenges, particularly when it comes to the capacities cities require to take advantage of its full potential. Then there is the imperative of ensuring that the use of AI-based solutions meets security and liability standards, and that citizens’ digital rights are protected. Responding to these challenges requires actions that go far beyond local governments’ power to legislate. Even so, a fragmented global regulatory environment containing glaring legal loopholes has led cities to position themselves as indispensable normative actors, both individually, by developing local standards, and collectively, within the frameworks of city networks and alliances.

In terms of collective action by cities, it is worth mentioning the work being done by the Cities Coalition for Digital Rights (CC4DR). This initiative, launched in 2018 by the cities of Barcelona, Amsterdam and New York, is supported by UN-Habitat and seeks to promote and defend digital rights in urban settings. Conceived as a pragmatic alliance founded on principles, the coalition enables its member cities (currently around 50) to share best practices and expertise to address shared challenges linked to the formulation of policies based on digital rights. The coalition also works to draw up legal, ethical and operational frameworks that help cities to promote human rights in digital settings.

In July 2021, the CC4DR established the Global Observatory of Urban Artificial Intelligence (GOUAI) to promote ethical AI systems in cities.5 The GOUAI works towards three fundamental goals. First, it seeks to contribute to the definition of basic ethical principles that can guide the adoption of AI solutions in cities; specifically, ensuring that the algorithmic tools used in urban settings are fair, non-discriminatory, transparent, open, responsible, cybersecure and sustainable, and that they safeguard citizens’ privacy. Second, it seeks to support the implementation of these principles by mapping leading ethical urban AI projects and strategies. These best practices, developed by cities around the world, can be consulted in the “Atlas of Urban AI” launched in 2022. Finally, the GOUAI seeks to disseminate these principles and best practices across the international urban community in order to promote an ethical approach to AI regulation from the local level.

By the same token, via municipalist associations, local governments are seeking political influence over the negotiation of transnational regulations like the EU’s AI Act. The role of Eurocities in particular stands out here. In early 2020 this network of major European cities, which has over 200 members from 38 countries, drew up a response to the European Commission’s White Paper on Artificial Intelligence, setting out the opportunities and challenges AI presents to European cities. Among other requests, it argued that local government representatives should be involved in the EU’s working group on artificial intelligence, that more funding should be allocated to developing digital abilities and literacy in municipal governments, and that the EU’s future regulatory framework for trustworthy AI should consider the ethical principles put forward by the CC4DR.

After the European Commission published its proposed AI law in 2021, Eurocities devoted substantial resources to promoting discussion among its members on the legislation’s relevance and implications for local governments. Specific amendments to the proposed regulation were then submitted on behalf of cities, and activities were organised as a way to continue influencing negotiations. A letter sent to the rapporteurs for the regulation is one specific example.

In terms of a common political stance in this area, cities generally support the broad definition of AI systems included in the AI Act, as well as the risk-based approach and the proposal to ban unacceptable uses. They nevertheless ask for a complete ban on mass biometric data collection systems in public spaces until respect for human rights can be verified. They also criticise the lack of alignment between AI systems and the EU’s General Data Protection Regulation (GDPR).

Regulating from the bottom up: local AI governance tools6

The limitations and deficiencies of most national laws in this area have led many cities to begin developing their own governance frameworks and normative instruments to ensure AI is used responsibly in their jurisdictions. Just like certain countries and international organisations, local governments are thus defining ethical normative frameworks and guiding principles for the municipal use of AI that is responsible and based on human rights. These ethical principles tend to be gathered in municipal declarations and strategies.

One good example is Barcelona’s “Municipal algorithms and data strategy for an ethical promotion of artificial intelligence”, published in 2021. It sets out an AI governance model that is based on digital rights and democratic principles. Certain measures stand out, including the creation of a public register of algorithms and the establishment of public procurement clauses that ensure that municipal AI systems respect human rights. New York is another pioneer city in this field. Its “AI Strategy” (October 2021)7 also calls for the city to benefit from using AI in an ethical and responsible manner that takes digital rights into account. In particular, the strategy describes how to modernise the city’s data infrastructure and defines the areas in which AI has the potential to bring the most benefits with the least possible damage, as well as ways the administration can use AI internally to serve citizens. Finally, the strategy considers the formation of alliances with research centres, as well as specific actions to guarantee that greater use of AI does not negatively impact New Yorkers’ digital rights.

In Latin America, the city of Buenos Aires stands out. Its “Plan de IA” (August 2021) aims to maximise AI’s benefits for running the city and to assist the evolution and consolidation of its use in strategic industries and areas that are key to the productive fabric and the government. It also aims to mitigate the potential risks deriving from the use of AI and to define ethical and legal principles like transparency, privacy, cybersecurity, respect for the environment, responsibility, human intervention and open government.

As well as these holistic strategies, some cities are promoting specific regulations to establish limits on the use of AI in their communities, particularly where there is a risk that using automated systems could lead to discrimination or jeopardise fundamental rights. One example is the New York City law on automated hiring systems, which stipulates that any such systems used in the city after April 2023 must be subjected to a bias audit in order to assess any potential unequal impact on certain groups. Other examples include the regulations put in place by US cities like Boston, Minneapolis, San Francisco, Oakland and Portland that prohibit government agencies, including the police, from using facial recognition technologies. These bans are based on concerns about civil liberties violations and discrimination being embedded in these tools via biases in data and algorithms.
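
To give a sense of what a bias audit of an automated hiring system might involve in practice, the following Python sketch compares selection rates across groups and computes each group's ratio to the most-selected group. This is only an illustration under stated assumptions: the text above does not specify which metric the New York City law requires, the 0.8 threshold is borrowed from the "four-fifths" rule of thumb used in US employment practice, and the function names and sample data are hypothetical.

```python
from collections import Counter


def selection_rates(records):
    """Return {group: share of candidates selected} from (group, selected) pairs."""
    totals = Counter()
    selected = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selected candidates")
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """List groups whose ratio falls below the threshold (an assumed 0.8 here)."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Made-up outcomes of a hypothetical screening tool: (group label, was selected).
records = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 20 + [("B", False)] * 80
)

rates = selection_rates(records)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)
print(rates, ratios, flagged)
```

In this invented sample, group B is selected half as often as group A, so it would be flagged for closer review. A real audit would also need to consider sample sizes, intersecting categories and the legal definitions that apply in the jurisdiction concerned.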

Finally, some local public policies may have as profound a normative impact as the norms mentioned above. The algorithm registers of Amsterdam and Helsinki are one such case. In this pioneering initiative the two cities publish information on public websites about the algorithmic systems used to provide certain services, thereby constructing a fundamental tool for guaranteeing transparency and accountability. Indeed, it has acquired such importance that another seven European cities (Barcelona, Bologna, Brussels, Eindhoven, Mannheim, Rotterdam and Sofia) have, with the support of Eurocities, joined forces with Amsterdam and Helsinki to develop a standardised “data schema” that establishes what data should be published on algorithm registers. In doing this, these cities establish themselves as pioneers in the race to regulate AI from the bottom up.
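
As a rough illustration of what a standardised entry in an algorithm register could look like, the Python sketch below defines a small record type and checks that no field is left empty before publication. The field names, the sample entry and the contact address are all hypothetical; they are not taken from the schema the cities developed, which is not reproduced in this text.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class RegisterEntry:
    """One hypothetical register record; every field name is an assumption."""
    name: str
    department: str
    purpose: str
    data_sources: list
    human_oversight: str
    contact: str

    def missing_fields(self):
        """Return the names of fields that are empty and would block publication."""
        return [key for key, value in asdict(self).items() if not value]


# An invented example entry, for illustration only.
entry = RegisterEntry(
    name="Parking permit triage (hypothetical)",
    department="Mobility",
    purpose="Prioritise permit applications for manual review",
    data_sources=["permit applications", "vehicle registry"],
    human_oversight="A civil servant confirms every decision",
    contact="algorithms@example.org",
)

# Serialise the record as it might appear on a public website.
published = json.dumps(asdict(entry), indent=2, ensure_ascii=False)
print(published)
```

The point of a shared schema is that residents and researchers can compare entries across cities field by field; a check such as `missing_fields()` is one simple way a city could enforce completeness before an entry goes public.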

Final considerations

There is an increasingly urgent need to ensure that the development and use of AI does not compromise fundamental rights. This has led to a new global race to set the standards that govern and regulate AI. As regulatory initiatives of various types proliferate, a playing field has opened up on which great powers are competing, but so too are other actors, like intergovernmental organisations, large-scale technology platforms, civil society organisations and cities.

In a global context of regulatory fragmentation and clashing digital governance models, scaling up the regulations being adopted by many cities around the world can become a practical, agile and effective way to accelerate the adoption of AI solutions at all levels. Ultimately, just as cities are now unquestionably centres of innovation and spaces for experimenting with responses to major global challenges like climate change, so the frontiers of AI governance can begin to be defined from below.

It must, however, be underlined that these days the regulatory work done by cities on AI is essentially a Global North phenomenon. It is significant, for example, that 90% of cities in the Cities Coalition for Digital Rights are from Europe or the United States. It is therefore worth asking whether the digital rights protection approach that guides most of these regulations could end up inspiring similar initiatives in settings where the prevailing logic is techno-authoritarianism.

References

Finnemore, Martha and Sikkink, Kathryn. “International Norm Dynamics and Political Change”. International Organization, vol. 52, no. 4 (1998), pp. 887–917.

Notes:

1- Despite many attempts, no agreed-upon definition of AI exists. Crucially, AI is not a single thing, but an umbrella term that takes in various technologies and applications. Complicating matters further, the applications being referred to as AI are continually evolving.

2- This is evident from the way the legislation has inspired other laws, such as the California Consumer Privacy Act (CCPA) and China’s Personal Information Protection Law (PIPL).

3- Notable among these is California’s Consumer Privacy Act (CCPA) which, in the absence of federal-level legislation, has become the de facto regulation in the sector.

4- This Nota Internacional does not use the terms “norm” and “normative power” in their legal sense (i.e. as legal rules established by a competent authority to bring order to behaviour via the creation of rights and obligations). Rather, it uses them in their senses from social constructivism and global governance, where norms are collective expectations or appropriate standards of behaviour (Finnemore and Sikkink, 1998).

5- The GOUAI is led by CIDOB’s Global Cities Programme.

6- The projects mentioned in this section form part of the GOUAI’s Atlas of Urban AI, which is available at: https://gouai.cidob.org/atlas

7- This strategy was drawn up during the previous mandate, while John Farmer was Chief Technology Officer of New York. The new team at the NYC Office of Technology and Innovation published its new Strategic Plan in October 2022, which, surprisingly, makes no reference to AI governance or regulation. See: https://www1.nyc.gov/assets/oti/downloads/pdf/about/strategic-plan-2022.pdf

DOI: https://doi.org/10.24241/NotesInt.2023/286/en